
Katherine Peng

2026

Designing a compliance-grade RAG system medical consultants can trust

What I delivered

This work started with an over-scoped “full RAG platform” vision. Research showed that consultants care less about AI layers and more about trust, ownership, and evidence control. I turned those findings into a shippable MVP and a scalable architecture.

Defined a feasible MVP: admin-managed hub + local PDF/DOC retrieval + citation-first answers (no connectors).

Designed the AI behaviour contract for multi-turn scenarios: uncertainty handling, “no evidence” responses, conflict handling, and scoped hub context.

Addressed a key trust constraint: citations remain useful even when cross-team restrictions prevent users from opening the original files.

Established a quality bar using 7 research sessions: scenario set + rubric for grounded answers and evidence usability.

Mapped the 6–12 month path: governance/RBAC → SharePoint/Egnyte connectors → orchestration & artifacts.

Context

Consultants need fast answers, but every claim must be defensible. In compliance-grade work, a fluent answer without evidence is unusable. The system must make it clear what is known, what is supported, and what is restricted.

Constraints & feasibility (MVP reality)

Admin uploads documents into hubs (MVP).

Inputs limited to PDF/DOC.

Per-hub retrieval scope to avoid cross-domain leakage.

Up to 50 files per folder.

File size: up to 100MB per file (configurable / pending engineering confirmation).

Access requests handled via email to hub admin (MVP).

Some users may see citations but be unable to open the source files because of policy separation (Medical vs Marketing).

Design implication: evidence UX must degrade gracefully under permissions.
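The upload constraints above map naturally onto a single validation step when an admin adds a file. A minimal sketch, assuming hypothetical names (`UploadLimits`, `validate_upload`), that "DOC" covers both `.doc` and `.docx`, and that the 100MB ceiling is a configurable default rather than a confirmed limit:

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class UploadLimits:
    # Hypothetical MVP limits; the per-file size is a configurable default
    # pending engineering confirmation, not a confirmed system limit.
    allowed_extensions: frozenset = frozenset({".pdf", ".doc", ".docx"})
    max_files_per_folder: int = 50
    max_file_bytes: int = 100 * 1024 * 1024  # 100MB


def validate_upload(filename: str, size_bytes: int, files_in_folder: int,
                    limits: UploadLimits = UploadLimits()) -> list[str]:
    """Return every problem found; an empty list means the upload is accepted."""
    problems = []
    ext = os.path.splitext(filename)[1].lower()
    if ext not in limits.allowed_extensions:
        problems.append(f"{filename}: only PDF/DOC files are supported")
    if size_bytes > limits.max_file_bytes:
        problems.append(f"{filename}: exceeds the per-file size limit")
    if files_in_folder >= limits.max_files_per_folder:
        problems.append("folder already holds the maximum number of files")
    return problems
```

Returning a list of problems rather than stopping at the first one lets the admin UI show every issue at once.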

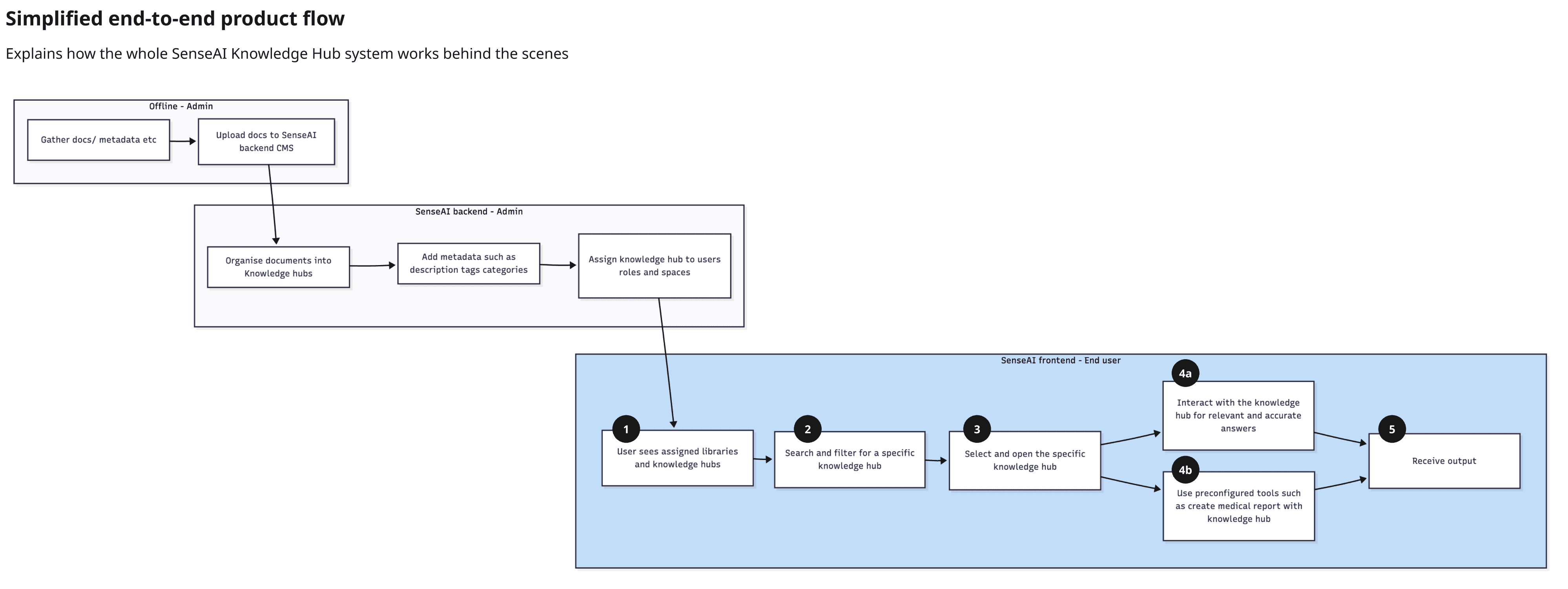

System snapshot (RAG pipeline behind the scenes)

Admin uploads docs → indexing and metadata

User selects hub → asks question

System checks access → retrieves relevant chunks → generates grounded answer

UI returns answer + citations into a side panel

User verifies evidence; restricted sources show snippet/highlight + “request access” path
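The pipeline steps above can be sketched end to end in a few functions. This is an illustrative sketch, not the production system: every name here is hypothetical, word-overlap ranking stands in for embedding-based retrieval, and the generation step is a placeholder for the LLM call.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    doc_id: str
    title: str
    text: str


@dataclass
class Citation:
    title: str
    snippet: str
    restricted: bool  # True: user sees the snippet but cannot open the file


def retrieve(chunks: list[Chunk], question: str, k: int = 3) -> list[Chunk]:
    # Stand-in for vector search: rank chunks by word overlap with the question.
    q_words = set(question.lower().split())
    scored = [(len(q_words & set(c.text.lower().split())), c) for c in chunks]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


def answer(user: str, hub: str, question: str,
           hub_members: dict[str, set[str]],
           hub_chunks: dict[str, list[Chunk]],
           file_access: dict[str, set[str]]) -> dict:
    # 1. Check hub membership before any retrieval happens.
    if user not in hub_members.get(hub, set()):
        return {"status": "no_access", "answer": None, "citations": []}
    # 2. Retrieve only from the selected hub (per-hub scope).
    hits = retrieve(hub_chunks.get(hub, []), question)
    if not hits:
        return {"status": "no_evidence", "answer": None, "citations": []}
    # 3. File-level permission decides normal vs restricted citation state.
    allowed = file_access.get(user, set())
    citations = [Citation(c.title, c.text[:120], c.doc_id not in allowed) for c in hits]
    # 4. Placeholder for the grounded generation call (LLM prompted with the hits).
    refs = ", ".join(f"[{i + 1}]" for i in range(len(hits)))
    return {"status": "answered", "answer": f"Answer grounded in {refs}",
            "citations": citations}
```

The ordering is the point: the access check runs before retrieval, retrieval never leaves the selected hub, and the restricted flag is computed per citation, so a blocked file still yields an inspectable snippet.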

Key design and architecture decisions

Decision: Per-hub scope (MVP)

Why: prevents leakage, keeps answers defensible

Trade-off: cross-hub reasoning deferred

Decision: Citation-first response with evidence panel

Why: trust is the core adoption gate

Trade-off: more UI/system complexity than “chat”

Decision: Restricted-source behaviour as first-class UX

Why: policy blocks access to original files for some users

Trade-off: requires clear messaging and an access-request flow

Decision: Admin-managed hub in MVP

Why: fastest way to ship with governance

Trade-off: self-serve hub ownership deferred to platform phase

AI behaviour spec (multi-turn)

If retrieval finds no relevant evidence: ask a clarifying question or recommend what to upload; do not guess.

If evidence is weak: answer + explicit limits + show what evidence was used.

If evidence conflicts: compare sources and surface disagreement.

Always cite; if a claim is not supported by sources, label it as an assumption.
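One way to make the behaviour contract testable is to express it as a small decision function over retrieved evidence, plus a renderer that enforces "always cite". A minimal sketch, assuming hypothetical relevance thresholds, a 0–1 score scale, and an upstream step that normalizes each chunk into a comparable claim; none of these values come from the research:

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned through evaluation.
MIN_RELEVANCE = 0.3
STRONG_RELEVANCE = 0.6


@dataclass
class Evidence:
    source: str
    score: float  # retrieval relevance in [0, 1] (assumed scale)
    claim: str    # normalized claim extracted from the chunk (assumed upstream step)


def choose_response_mode(evidence: list[Evidence]) -> str:
    relevant = [e for e in evidence if e.score >= MIN_RELEVANCE]
    if not relevant:
        # No evidence: ask a clarifying question or suggest an upload; never guess.
        return "clarify_or_request_upload"
    if len({e.claim for e in relevant}) > 1:
        # Sources disagree: compare them and surface the disagreement.
        return "compare_conflicting_sources"
    if max(e.score for e in relevant) < STRONG_RELEVANCE:
        # Weak evidence: answer, state the limits, and show the evidence used.
        return "answer_with_limits"
    return "answer_with_citations"


def render_claims(claims: list[tuple[str, list[int]]]) -> str:
    # Each sentence carries its citation markers; unsupported ones are labelled.
    parts = []
    for text, refs in claims:
        if refs:
            parts.append(text + " " + "".join(f"[{r}]" for r in refs))
        else:
            parts.append(text + " (assumption, not supported by sources)")
    return " ".join(parts)
```

This sketch checks for conflict before weakness, so disagreeing sources are surfaced even when each one is only moderately relevant; the opposite order is an equally defensible choice.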

Trust patterns: citations + restricted access

Normal state: title + snippet + highlights + link to source

Restricted state: title + snippet + highlights + “Restricted — request access via admin email”

Principle: evidence should remain inspectable even when source files are not accessible.
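The two citation states above differ only in the link and the access note, so a single builder can produce both. A minimal sketch, assuming hypothetical names, non-overlapping character-offset highlight spans, and a `mailto:` link as the access-request path (the source only specifies "admin email"):

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import quote


@dataclass
class CitationCard:
    title: str
    snippet: str                 # passage text with highlights applied
    source_url: Optional[str]    # present only when the user can open the file
    access_note: Optional[str]   # present only in the restricted state


def highlight(snippet: str, spans: list[tuple[int, int]]) -> str:
    # Wrap each (start, end) span in <mark> tags, right to left so that
    # earlier offsets stay valid as tags are inserted.
    out = snippet
    for start, end in sorted(spans, reverse=True):
        out = out[:start] + "<mark>" + out[start:end] + "</mark>" + out[end:]
    return out


def build_card(title: str, snippet: str, spans: list[tuple[int, int]],
               source_url: str, can_open: bool, admin_email: str) -> CitationCard:
    text = highlight(snippet, spans)
    if can_open:
        # Normal state: title + snippet + highlights + link to source.
        return CitationCard(title, text, source_url, None)
    # Restricted state: same evidence, link replaced by an access-request path.
    subject = quote(f"Access request: {title}")
    note = f"Restricted: request access via admin email (mailto:{admin_email}?subject={subject})"
    return CitationCard(title, text, None, note)
```

Because the snippet and highlights are built the same way in both states, a restricted user still gets inspectable evidence; only the link is swapped for the request path.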

Evaluation (7 sessions → what “good” means)

Top recurring questions:

Will it hallucinate?

Who owns the hub?

Can I manage my own hub?

Can I see original files?

Can I switch hubs in one chat?

Quality metrics:

Task success with usable evidence

Citation usefulness

Trust (“I’d use this in a report”)

Access clarity (users understand restrictions)

Hallucination flags
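The quality metrics above can be rolled up across a scenario set. A minimal sketch, assuming hypothetical field names and a simple yes/no rating per metric; the rubric used in the sessions may be graded differently:

```python
from dataclasses import dataclass


@dataclass
class ScenarioResult:
    scenario_id: str
    task_success: bool          # task completed with usable evidence
    citation_useful: bool       # participant found the cited evidence useful
    would_use_in_report: bool   # trust: "I'd use this in a report"
    understood_access: bool     # participant understood any restriction shown
    hallucination_flags: int    # unsupported claims flagged by the reviewer


def summarize(results: list[ScenarioResult]) -> dict[str, float]:
    n = len(results)
    if n == 0:
        raise ValueError("no results to summarize")

    def rate(attr: str) -> float:
        # Fraction of scenarios where the boolean metric is True.
        return sum(getattr(r, attr) for r in results) / n

    return {
        "task_success": rate("task_success"),
        "citation_usefulness": rate("citation_useful"),
        "trust": rate("would_use_in_report"),
        "access_clarity": rate("understood_access"),
        "hallucination_flags_per_scenario": sum(r.hallucination_flags for r in results) / n,
    }
```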

Roadmap (MVP → Platform)

Now: Admin-managed local retrieval hubs (PDF/DOC), citations, restricted-source behaviour, email access requests

Next: Governance surfaced in UI (roles/RBAC), hub lifecycle, versioning/freshness

Later: SharePoint/Egnyte connectors, orchestration flows (evidence pack / report drafting), monitoring and evaluation

Impact & takeaways

This work turned “RAG as a feature” into “RAG as an evidence system.” We shipped an MVP definition that’s feasible under real constraints (admin-managed hubs, PDF/DOC only) while establishing the behaviour contract and trust patterns needed to scale. The long-term platform path is clear: governance in-product → connectors (SharePoint/Egnyte) → orchestration (evidence packs and report drafting) with measurable quality signals.

__________________

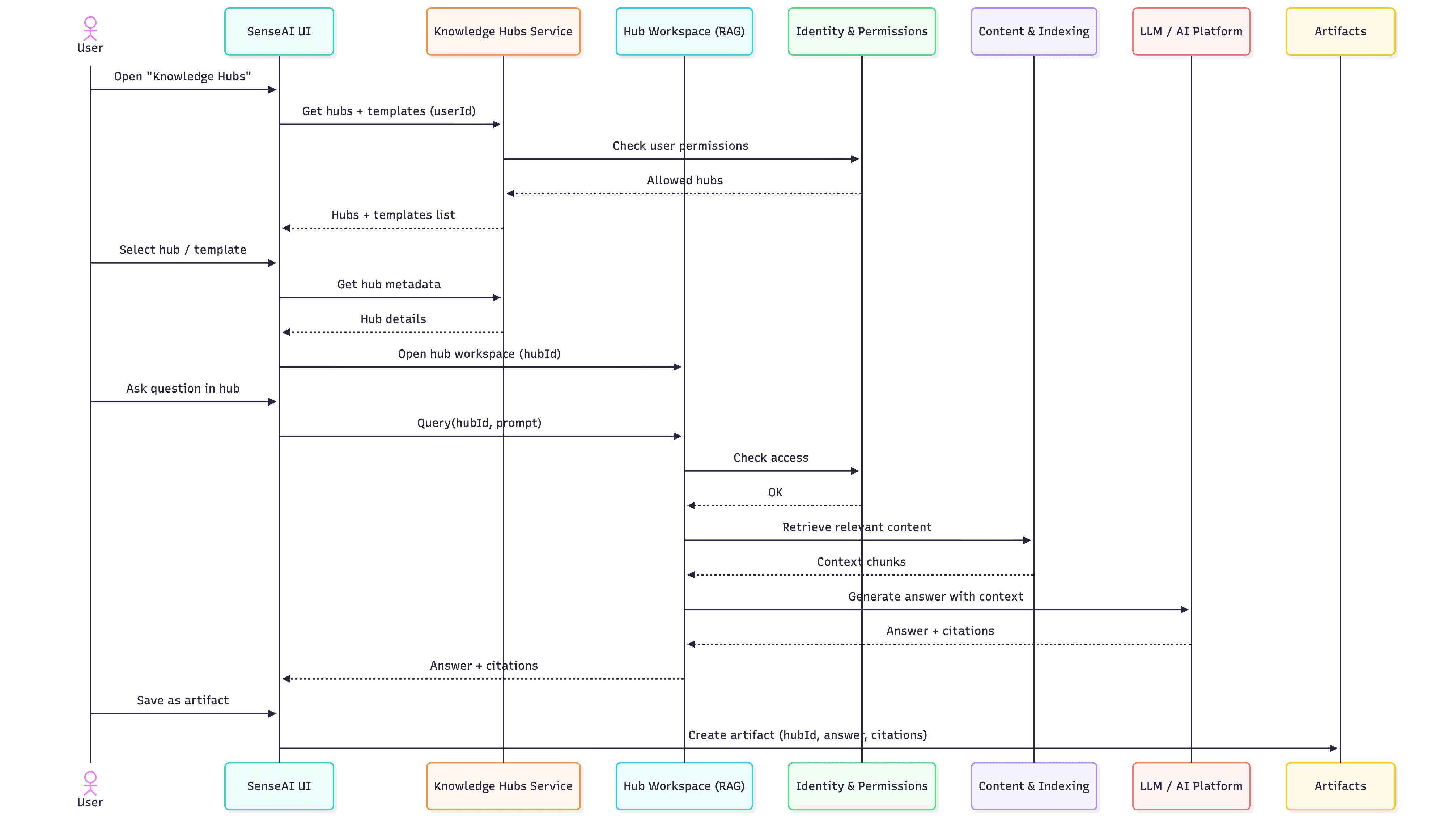

Appendix — Service Sequence Diagram (MVP)

This sequence diagram documents the exact order of API calls, permission checks, and system interactions from “Open Knowledge Hubs” to “Answer + Citations” and “Save as Artifact.” It was used to validate feasibility and identify failure points early.